How to Participate

We recommend you start by solving the basic problems and computing $\bar{P}_{\text{total}}$ or $\sigma_{\theta\theta}$ as described here. Compare your results with the reference solutions that are provided for these quantities for basic validation of your approach (problem definition, solution, postprocessing, ...). Then, compute the remaining quantities of interest for the basic problems and organize the computed results and performance data from your simulations as described below. Repeat this process for the moderate problems, hard problems, and challenge problems. We expect participants to submit results from multiple runs for each benchmark problem (e.g., with increasing mesh density or basis function order). Additionally, for parallel algorithms, participants are encouraged to submit results corresponding to a high-speed (i.e., large number of processors) and a high-efficiency (i.e., minimum number of processors) run.

Once you have prepared all of this data, send us a link to your results. We will download and archive your data, calculate the errors $\mathrm{err}_{\bar{P}_{\text{total}}}$, $\mathrm{err}^{L_1}_{\bar{\bar{P}}}$, and $\mathrm{err}^{L_2}_{\sigma_{\theta\theta}}$ relative to the reference results, and normalize the reported computational costs. We will then send you your performance results and ask you to confirm that you would like them displayed on this website. Once you confirm, the method will be added to the list of benchmarked methods and its performance results will be reported. Because the complete reference results are not shared with participants, this is a semi-blind benchmarking methodology.
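For a self-check against the reference solutions that are provided, relative errors can be sketched as below. The exact error definitions and sampling used by the organizers are not given on this page, so the formulas here are assumptions (the usual relative difference for a scalar, and relative L1 and L2 norms for sampled quantities), and the function names are hypothetical.

```python
import math


def relative_error_scalar(computed, reference):
    """Relative difference for a single value such as the total absorbed power."""
    if reference == 0:
        raise ValueError("reference value must be nonzero")
    return abs(computed - reference) / abs(reference)


def _check_lengths(computed, reference):
    if len(computed) != len(reference):
        raise ValueError("computed and reference must have the same number of samples")


def relative_error_l1(computed, reference):
    """Assumed relative L1-norm error: sum|c - r| / sum|r|."""
    _check_lengths(computed, reference)
    denominator = sum(abs(r) for r in reference)
    if denominator == 0:
        raise ValueError("reference samples must not all be zero")
    return sum(abs(c - r) for c, r in zip(computed, reference)) / denominator


def relative_error_l2(computed, reference):
    """Assumed relative L2-norm error: sqrt(sum|c - r|^2) / sqrt(sum|r|^2)."""
    _check_lengths(computed, reference)
    denominator = math.sqrt(sum(abs(r) ** 2 for r in reference))
    if denominator == 0:
        raise ValueError("reference samples must not all be zero")
    numerator = math.sqrt(sum(abs(c - r) ** 2 for c, r in zip(computed, reference)))
    return numerator / denominator
```

Values computed this way are only a sanity check on your own setup; the errors reported on the website are the ones calculated by the organizers from the complete reference results.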

Data Organization for Submission

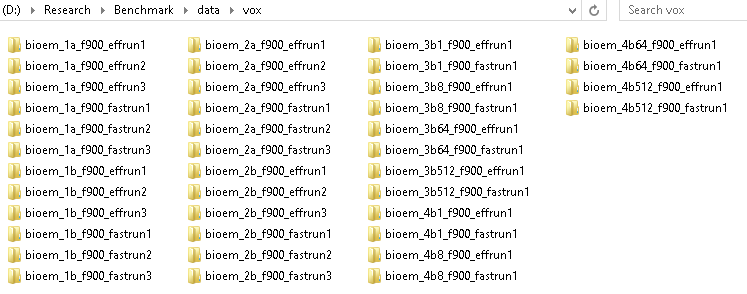

To be considered for inclusion in the benchmark, the submitted data should be organized into multiple directories and files using a specific naming convention and file format for each run/simulation. This sample submission for benchmark problem 1a shows the directory structure, file names, and file formats. We ask that you submit the following files for each run/simulation:

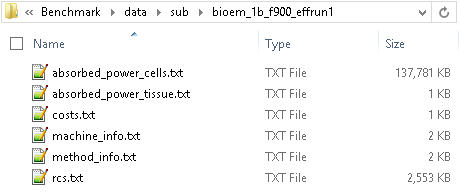

- method_info.txt, which contains a description of the method used (as shown here) and any references to publications

- costs.txt, which contains the computational costs of the run: wall-clock time (separated into preprocess, solve, and postprocess) and the maximum memory requirement per node (the largest value among all nodes)

- machine_info.txt, which contains information about the hardware the results were obtained on, including its theoretical peak Flop/s performance per node (along with processor information) and whether it was a serial or parallel run

- absorbed_power_cells.txt, absorbed_power_tissue.txt, and rcs.txt, which contain the calculated quantities of interest (in the specified format)

Image 1: A sample set of directories. Notice the naming scheme.

Image 2: Contents of one run directory.
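Before sending a submission, it may help to check each run directory for the files listed above. The following is a minimal sketch, not an official validator: the function name is hypothetical, the directory naming scheme and file formats are not checked, and the mapping of rcs.txt to the bistatic radar cross-section and of absorbed_power_cells.txt to the cell-averaged absorbed power density is an assumption based on the file names.

```python
from pathlib import Path

# Files requested for every run, as listed above.
REQUIRED_FILES = ("method_info.txt", "costs.txt", "machine_info.txt")

# Assumption: these two files hold the quantities of which at least one
# is needed for inclusion in the benchmarked methods list.
INCLUSION_FILES = ("rcs.txt", "absorbed_power_cells.txt")


def check_run_directory(run_dir):
    """Return a list of problems found in one run directory (empty if none)."""
    run_dir = Path(run_dir)
    problems = []
    for name in REQUIRED_FILES:
        if not (run_dir / name).is_file():
            problems.append(f"missing required file: {name}")
    if not any((run_dir / name).is_file() for name in INCLUSION_FILES):
        problems.append("need at least one of: " + ", ".join(INCLUSION_FILES))
    return problems
```

Calling `check_run_directory` on each run directory and printing any returned messages gives a quick completeness check; an empty list only means the expected file names are present, not that their contents follow the specified format.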

Frequently Asked Questions

- What if my method cannot solve all of the benchmark problems?

- The benchmark consists of models of increasing size and complexity, which gives rise to problems ranging from basic and moderate to hard and challenge. As the categories indicate, we do not expect every method to be suitable for solving every problem. Moreover, some of the quantities of interest required by the benchmark are particularly difficult to compute (see the next question). Nevertheless, the benchmark problems are designed to exercise the features of simulation methods that are most relevant to the solution of bioelectromagnetic problems. Thus, we encourage you to develop methods to solve as many problems and compute as many quantities of interest as possible. To be included in the benchmarked methods list, the submission must include either the bistatic radar cross-section or the cell-averaged time-averaged absorbed power density.

- What if I cannot calculate all of the quantities of interest?

- Depending on the method used, significant development and computational effort might be needed to postprocess the solutions found from the simulations to compute the relevant quantities of interest in the benchmark suite. To be included in the benchmarked methods list, the submission must include either the bistatic radar cross-section or the cell-averaged time-averaged absorbed power density.

- Do I have to submit my code and/or all of the parameters I used for my run?

- No. We do recommend that you keep a record of the code version, execution environment, and inputs so that you can replicate your own results. We hope this benchmark will increase the verifiability of computational research results and help bridge the credibility gap faced by computational scientists and engineers [4]; however, we do not consider replicability to be the same as reproducibility in scientific computing [15], [16].

- What do you think about reproducible research in computational science and engineering?

- See this article and presentation.